A Letter to Roger Penrose

To: puzzles@penroseinstitute.com

Subject: This Puzzle Is Not Even Wrong

Dear Prof. Penrose and the Penrose Institute,

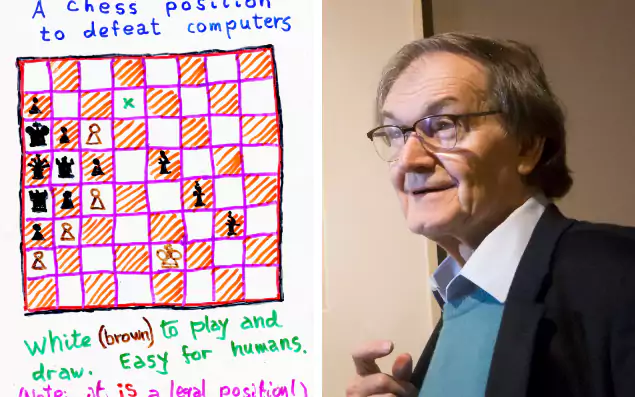

I wish to comment on your chess puzzle, which came to my attention through Sarah Knapton's recent piece in the Telegraph.

The presentation of this puzzle in the Telegraph piece is wrong-headed in several distinct ways.

First, the puzzle tells you nothing about consciousness. Of course, I know nothing about consciousness myself. But nothing about this puzzle adds anything to that. At most it's a kind of illusion. Fun and amusing, as puzzles often are, but not more.

Second, it's obviously mistaken to suggest that computers "assume" anything at all about the puzzle, or "struggle" in any way, much less struggle because the position "looks" impossible.

Practically all modern chess engines have been selected to do one thing: choose the best move. They do not give any attention to who is winning. The absolute value of their evaluation function is utterly irrelevant. Only the relative values, comparing one possible move against another, matter at all. And at this task, these engines are very, very good indeed.
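To make the point about relative values concrete, here is a minimal sketch in Python. It is not how any particular engine is written: the move names and scores are invented for illustration, and real engines combine search with far more elaborate evaluation. It only shows that picking the highest-scoring move gives the same answer when every score is shifted by the same amount.

```python
def choose_move(scores):
    """Return the move with the highest score; only the ordering matters."""
    return max(scores, key=scores.get)


# Hypothetical evaluations in centipawns for three candidate moves.
# The numbers are made up; they say "badly losing" in absolute terms.
scores = {"move_a": -2840, "move_b": -2890, "move_c": -3100}

# Shift every score by the same constant, so the position now "looks" level.
shifted = {move: value + 2840 for move, value in scores.items()}

best = choose_move(scores)
best_shifted = choose_move(shifted)

print(best, best_shifted)  # the same move is chosen both times
```

Whether the engine reports the position as hopeless or as dead level, the move it plays is the same, which is the only thing it was selected for.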

Any of the top chess engines can take either side of this puzzle against any comer, human or non-human, and achieve essentially perfect results. Let the engine play black and it will win against imperfect play, or draw, but never lose. But let the engine play white and it will also never lose.

Humans, practically all of whom are conscious (US Republicans excepted), will not do nearly so well.

Chess engines will also perform flawlessly on a task they were not specifically selected for: ask which side they would rather play. Overwhelmingly, chess engines will choose black. Which is correct. White need only make a single error and black can win. But for black to lose requires practically an intention to lose.

The chess engine just lives and evolves in a very narrow environment. The evaluation function serves as a primitive "want". It makes choices, survives, and spawns a next generation, with the aid of a kind of symbiotic assistance from human programmers. Or not. Given time and sufficient resources, surely more complex systems will evolve. Just as living systems do. Perhaps precisely as living systems do.

It is not lost on me, however, that your real goal here is to identify human brains worthy of study, in an effort to understand the brain and, in particular, consciousness. And if I've seemed critical, I assure you it is a narrow criticism.

Consciousness. What is it? How does it arise? These are questions I applaud you for tackling.

Commentary